On July 12, 2024, the European Union’s long-awaited Artificial Intelligence Act (AI Act) was finally published. It will enter into force on the twentieth day following its publication; i.e., on August 1, 2024. The AI Act is a landmark legal framework that imposes obligations on both private and public sector actors that develop, import, distribute, or use in-scope AI systems.

1) What is in scope of the AI Act?

Most of the AI Act’s provisions govern “AI systems,” which are defined as machine-based systems:

- Designed to operate with varying levels of autonomy.

- That may exhibit adaptiveness after deployment.

- That infer, from the input they receive, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.

The definition is fairly broad and has attracted debate over its exact boundaries. The recitals to the AI Act provide some additional clarification, including by specifying that AI systems should be distinguished from traditional software systems or programming approaches, as well as from systems that are based on rules defined solely by individuals to automatically execute certain operations.

The AI Act also includes (limited) provisions that apply only to “general-purpose AI models,” which are defined as AI models that display significant generality, are capable of competently performing a wide range of distinct tasks, and can be integrated into a variety of downstream systems or applications. An example of a general-purpose AI model is OpenAI’s GPT-4.

To assess whether the AI Act is relevant to your organization, it is essential to determine whether a particular AI-powered tool, product, or model is covered by the AI Act’s definition of AI system or general-purpose AI model.

2) Who is impacted by the AI Act?

The AI Act impacts various stakeholders and makes a key distinction between providers and deployers of AI systems:

- The provider is typically the company that develops an AI system or general-purpose AI model and places it on the EU market under its own name or trademark. The provider is in many respects the main target of the AI Act’s obligations.

- The deployer is typically the company that uses an AI system for its professional activities. Deployers are subject to comparatively fewer – and less onerous – obligations under the AI Act.

Obligations also attach to importers and distributors of AI systems, as well as authorized representatives appointed by certain providers of AI systems or general-purpose AI models established outside the EU. In addition, individuals will be impacted by the AI Act – notably because they will in some cases be granted the ability to exercise a new right to receive an explanation of decision-making that involves certain high-risk AI systems and affects them individually.

3) At a high level, how does the AI Act regulate AI systems, practices, and models?

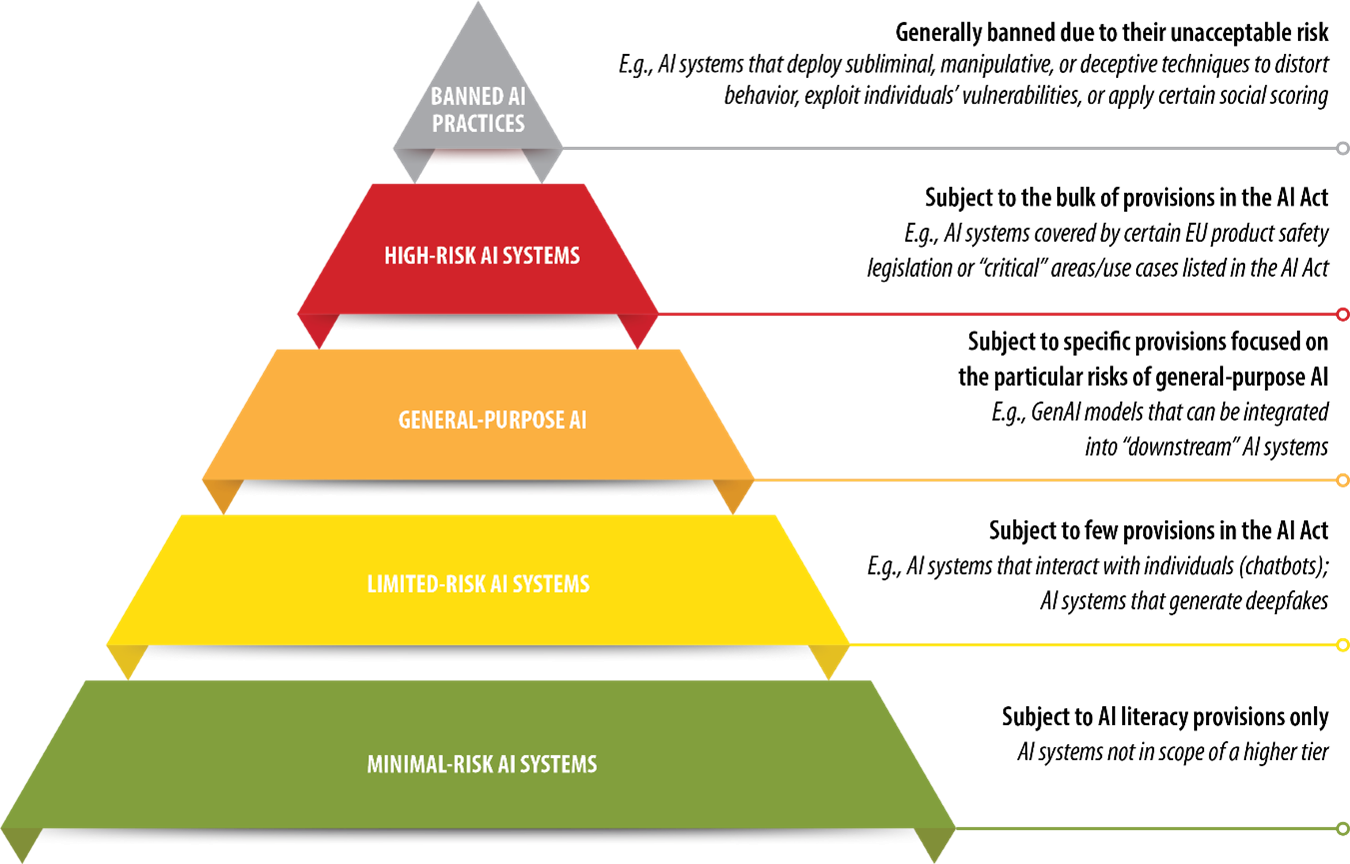

The AI Act takes a risk-based approach to regulation, which means that the AI Act imposes different rules depending on the risk (to individuals and society) associated with the placing on the EU market or use of an AI system or general-purpose AI model. The higher the risk, the more stringent the applicable rules. At a high level, the following risk “categories” or “tiers” can be distinguished (although there is a level of overlap between them):

4) Which AI practices are banned?

The AI Act bans certain AI systems and practices due to the unacceptable risks that they pose to individuals and society as a whole. These AI systems and practices:

- Deploy subliminal, manipulative, or deceptive techniques to distort behavior.

- Exploit individuals’ vulnerabilities (due to age, disability, or social or economic situation) to distort their behavior.

- Apply social scoring in an out-of-context, unjustified, or disproportionate way.

- Assess or predict the likelihood of an individual committing a crime based solely on profiling them or assessing their personality traits.

- Create or expand facial recognition databases through the untargeted scraping of facial images from the internet or CCTV footage.

- Infer emotions of an individual in the workplace or in educational institutions.

- Use biometric categorization systems to deduce race, political opinions, trade union membership, and other sensitive attributes.

- Use real-time remote biometric identification in public spaces for law enforcement purposes.

The AI Act includes limited exceptions to these prohibitions, particularly in law enforcement contexts.

5) What does the AI Act say about high-risk AI systems?

The AI Act includes two categories of AI systems that are considered to be high-risk AI systems (HRAIS) and therefore subject to rigorous requirements and restrictions:

- AI systems falling under certain EU product safety laws. These are AI systems that are products (or are used as safety components of products) covered by specific EU product safety laws listed in Annex I to the AI Act. The laws listed in Annex I include EU Directives and Regulations covering safety requirements for machinery, toys, radio equipment, motor vehicles, medical devices, and equipment/systems for use in explosive atmospheres.

- AI systems falling within certain high-risk use cases (also known as “stand-alone” HRAIS). AI systems falling within one of the critical use cases and areas set out in Annex III to the AI Act are presumed to be HRAIS. These include certain AI systems used for:

  - Biometric identification and categorization of individuals.

  - Emotion recognition.

  - Management and operation of certain (critical) infrastructure.

  - Purposes relating to employment, worker management, and access to self-employment (including in particular recruitment, selection, promotion, termination, allocation of tasks, and monitoring of behavior).

  - Risk assessment and pricing for life and health insurance.

HRAIS are subject to a litany of detailed requirements. For example:

- A risk management system must be established, implemented, documented, and maintained throughout the life cycle of the HRAIS.

- Training, validation, and testing data sets must meet particular “quality” criteria.

- Particular technical documentation must be drawn up and kept up to date.

- The HRAIS must technically allow for the automatic recording of events (logs).

- The HRAIS must be designed to be sufficiently transparent and must be accompanied by instructions for use for deployers containing detailed information prescribed by the AI Act.

- The HRAIS must be designed so it can be effectively overseen by individuals – including by incorporating appropriate human–machine interface tools.

- The HRAIS must be designed and developed to achieve an appropriate level of accuracy, robustness, and cybersecurity.

Providers must ensure the HRAIS’s compliance with these requirements, in addition to meeting further obligations. For example, providers of HRAIS must (1) have a quality management system in place; (2) keep certain documentation and logs; (3) ensure that the HRAIS undergoes the relevant conformity assessment procedure (before it is placed on the market or put into service); and (4) establish a post-market monitoring system. Providers are also obligated to report certain “serious incidents” or risks to health, safety, and fundamental rights to relevant market surveillance authorities.

By contrast, deployers of HRAIS have fewer obligations. They will be required, for example, to:

- Take appropriate measures to use the HRAIS in accordance with the provider’s instructions for use.

- Assign human oversight of the HRAIS’s use (to individuals who have the necessary competence, training, authority, and support).

- Ensure that input data (to the extent that the deployer has control over it) is “relevant and sufficiently representative in view of the intended purpose” of the HRAIS.

- Inform (as applicable) providers, importers, distributors, and relevant market surveillance authorities of serious incidents or of certain risks to health, safety, or fundamental rights involving the HRAIS.

- Use the instructions for use to comply with their obligation to carry out a data protection impact assessment under the EU General Data Protection Regulation (GDPR), if applicable.

- Inform individuals that they are subject to the use of an HRAIS if certain types of HRAIS are used to make or facilitate decisions that impact them.

- Respond to individuals exercising their “right to explanation of individual decision-making.”

Some deployers must also carry out a so-called “fundamental rights impact assessment” (FRIA) before they can start using an HRAIS. For example, banks may have to carry out a FRIA in connection with certain HRAIS used to evaluate individuals’ creditworthiness, and insurance companies may need to perform a FRIA if they use certain HRAIS for risk assessment and pricing purposes for life or health insurance.

6) What does the AI Act say about general-purpose AI models?

The AI Act contains specific provisions on general-purpose AI models that differ from those applicable to AI systems. Providers of general-purpose AI models are subject to a number of obligations, including requirements to:

- Draw up technical documentation containing particular information described by the AI Act and keep it up to date.

- Provide certain transparency information to (downstream) providers that intend to integrate the general-purpose AI model into an AI system.

- Put in place a policy to comply with EU copyright law and related rights (in particular to identify and comply with any reservation of rights expressed pursuant to the EU Copyright Directive).

- Publish a sufficiently detailed summary of the content used to train the general-purpose AI model.

Further obligations apply to providers of general-purpose AI models that present systemic risk. These are general-purpose AI models with particularly high impact capabilities that can be propagated at scale across the value chain. This category is intended to cover only the most powerful general-purpose AI models that can have a significant impact on the EU market due to their reach or due to actual or reasonably foreseeable negative effects on public health, safety, public security, fundamental rights, or society as a whole. They are subject to additional obligations, including obligations to perform model evaluations using state-of-the-art methods, to report serious incidents involving the model, and to mitigate possible systemic risks.

7) What are the requirements that attach to limited-risk AI systems?

Various transparency or labelling obligations apply to AI systems that pose limited risk only, including:

- AI systems that interact directly with individuals (e.g., chatbots).

- AI systems that generate synthetic audio, images, video, or text.

- Emotion recognition AI systems or biometric categorization AI systems.

- AI systems that generate deepfakes.

- AI systems that generate and manipulate text to inform the public of matters of public interest.

For example, chatbots must be designed so that users are informed they are interacting with AI (unless it is obvious). AI systems that generate synthetic audio, images, video, or text must be designed so that outputs are marked in a machine-readable format and detectable as AI generated or manipulated.

8) What about AI systems that do not fall within the above categories?

Most AI systems will not fall within any of the above categories and are considered to present minimal risk. Such AI systems escape the application of most of the provisions of the AI Act, although the AI Act lays the groundwork for voluntary AI codes of conduct to foster the application of certain requirements relating to HRAIS to other, less risky AI systems.

The AI Act also contains particular obligations requiring providers and deployers to ensure their staff and other persons dealing with AI systems on their behalf have a sufficient level of AI literacy. At least in principle, these obligations apply regardless of the level of risk the relevant AI systems represent.

9) Does the AI Act impact companies in the EU only?

No: the AI Act has explicit extraterritorial reach – meaning that it can apply to operators established or located in the United States, UK, and other non-EU countries – even if they do not have a presence in the EU. In particular, the AI Act impacts:

- Providers established or located outside the EU if they place an AI system or general-purpose AI model on the EU market or put it into service in the EU.

- Providers and deployers established or located outside the EU if the output produced by an AI system is used in the EU.

10) When do we have to comply?

The AI Act will not be fully operational from day one: different transition periods apply in order to give operators of AI systems time to comply with the new rules. For example (and subject to some nuances), the AI Act’s requirements relating to:

- Banned AI practices will apply from February 2, 2025.

- General-purpose AI models will apply from August 2, 2025.

- HRAIS will apply from August 2, 2026 or August 2, 2027, depending on the type of HRAIS.
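For organizations that track these milestones in an internal compliance tool, the dates above can be captured in a simple lookup. The following is only an illustrative sketch: the dictionary keys and the function name `requirements_in_effect` are hypothetical, the two HRAIS entries are deliberately labeled "earliest" and "latest" because the applicable date depends on the type of HRAIS, and the nuances mentioned above are not modeled.

```python
from datetime import date

# Application dates as listed above. The keys are hypothetical labels chosen
# for this sketch; they are not terms used by the AI Act.
APPLICATION_DATES = {
    "banned_practices": date(2025, 2, 2),
    "general_purpose_ai_models": date(2025, 8, 2),
    "hrais_earliest": date(2026, 8, 2),  # applies to some types of HRAIS
    "hrais_latest": date(2027, 8, 2),    # applies to the remaining types
}


def requirements_in_effect(on: date) -> list[str]:
    """Return the labels whose application date has been reached on `on`."""
    return [label for label, start in APPLICATION_DATES.items() if on >= start]


if __name__ == "__main__":
    print(requirements_in_effect(date(2025, 3, 1)))
```

A lookup like this does not replace a legal assessment of which transition period applies to a given system; it only shows how the staggered dates relate to one another.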

The AI Act also contains specific rules that apply to providers’ and deployers’ provision and use of “legacy” AI systems and general-purpose AI models that have been placed on the market or put into service before the relevant transitional periods elapse.

11) What if we do not comply (or get it wrong)?

For AI systems, compliance monitoring and enforcement will primarily be in the hands of the market surveillance authorities at the EU Member State level. The European Commission will supervise compliance with the AI Act’s requirements on general-purpose AI models. Noncompliance can result in the relevant authority taking measures to restrict or prohibit market access or to ensure that the AI system is recalled or withdrawn from the market. Lack of compliance may also lead to administrative fines. For violations of most of the AI Act’s requirements, these fines can reach up to €15 million or up to 3% of the offender’s total worldwide annual revenues for the preceding financial year (whichever is higher).
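The "whichever is higher" rule can be illustrated with a short calculation. This is a rough sketch of the general ceiling described above only: the function name `max_fine_eur` and the revenue figures are hypothetical, and the sketch does not cover violations that are subject to different ceilings or any other adjustment an authority might apply.

```python
from decimal import Decimal

FIXED_CEILING_EUR = Decimal("15000000")  # EUR 15 million
REVENUE_RATE = Decimal("0.03")           # 3% of total worldwide annual revenues


def max_fine_eur(worldwide_annual_revenue_eur) -> Decimal:
    """Upper limit of the fine: the higher of the fixed amount and the revenue share."""
    revenue = Decimal(str(worldwide_annual_revenue_eur))
    return max(FIXED_CEILING_EUR, revenue * REVENUE_RATE)


if __name__ == "__main__":
    # Hypothetical company with EUR 200 million in revenues:
    # 3% is EUR 6 million, so the EUR 15 million figure is the higher one.
    print(max_fine_eur(200_000_000))
    # Hypothetical company with EUR 2 billion in revenues:
    # 3% is EUR 60 million, which exceeds EUR 15 million.
    print(max_fine_eur(2_000_000_000))
```

In other words, the percentage-based ceiling becomes the relevant one once revenues for the preceding financial year exceed €500 million.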